Designing Blueberry's AI inbox so operators trust automation and turn comments into conversion moments.

ROLE

Product Designer

TEAM

Lauren Liang

Keiko Kobayashi

Shania Chacon

Valerie Peng

TIMELINE

2025 · 10 Weeks

TOOLS

Figma

V0

Prototyping

context

Every unanswered comment is a lost sale

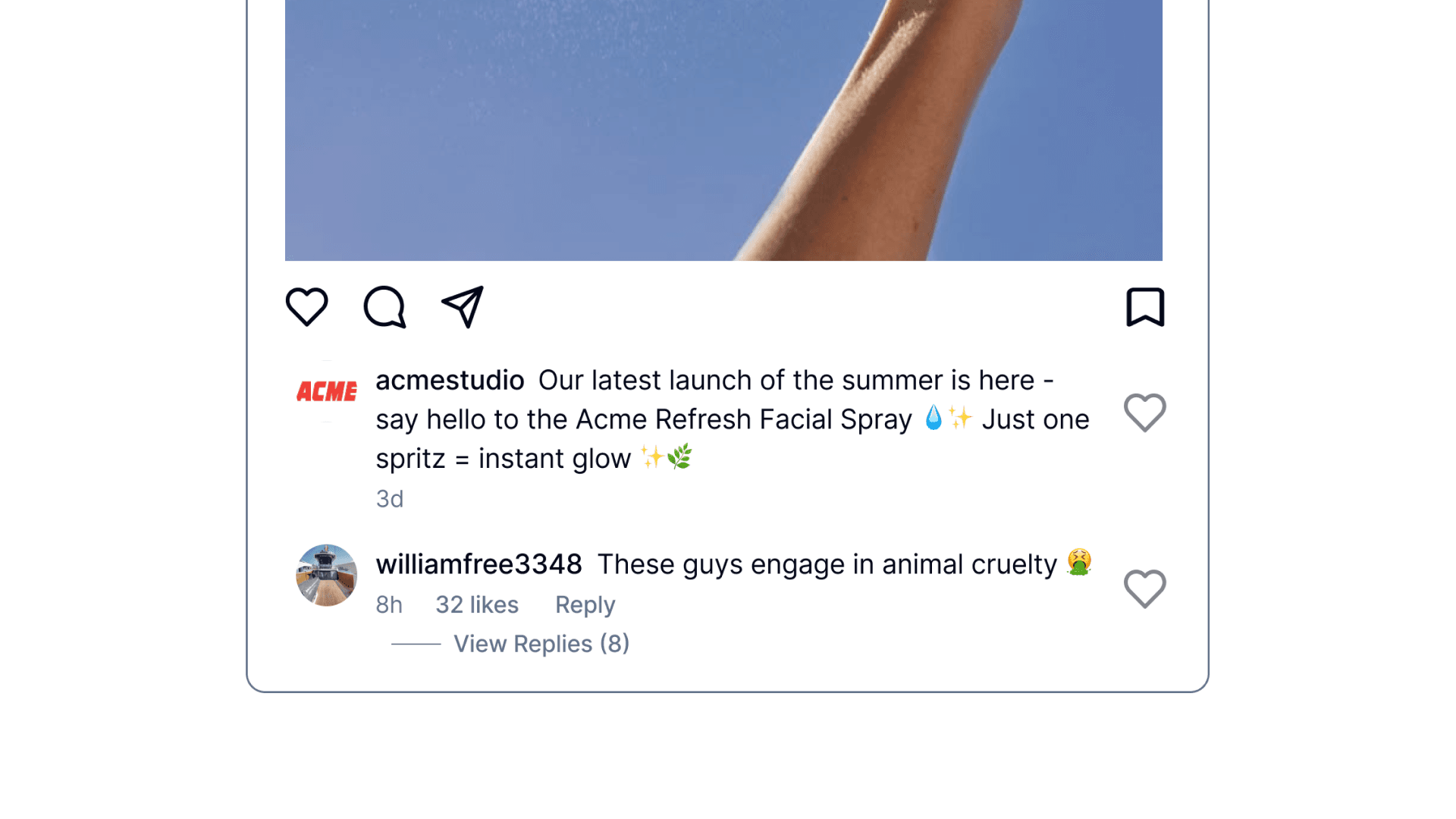

It's 11 PM. Maya, a growth lead at a $3M skincare brand, watches a customer ask if her best-seller is back in stock. 47 people are watching. By morning, 12 have bought from a competitor who replied first. Maya faces this daily: hundreds of comments across Instagram, Facebook, and TikTok. She's tried hiring more people, building internal tools, and experimenting with AI. Nothing sticks. AI tools sound robotic. Internal tools can't keep up.

so what?

This isn't a feature problem. It's a trust problem.

We interviewed growth leads at mid-sized eCommerce brands. Every one had tried AI tools. Every one had stopped. Not because of capability. Because of fear. The pattern: AI adoption fails when operators can't see or control what it does.

“Don't fully trust AI yet, but open to automation once I trust it over time.”

— Growth Lead, Mid-sized eCommerce Brand

process

The pivot that changed everything

Halfway through, everything changed. We'd spent weeks building for startup founders. Then the co-founders came back with new data: mid-sized eCommerce brands were the real opportunity. Our entire ICP flipped. Instead of solo entrepreneurs, we were designing for growth teams at brands doing millions in revenue. We had a choice: panic or adapt. We chose speed. V0 for rapid prototypes. Weekly testing sessions. The goal wasn't perfection. It was reducing ambiguity fast enough to ship something valuable.

Assumed

Startup founders

Solo operators, low volume, price-sensitive

Discovered

Mid-sized eComm teams

$2M+ revenue, high volume, trust-focused

Pivoted to

Trust-first automation

Visibility, control, reversibility

design principle

AI assists first. Automates only when trusted.

This became our north star. Every feature had to let humans see what AI was doing, understand why, and override it instantly. No black boxes. No "magic." We rejected directions that violated this principle. Each would have shipped faster. Each would have failed in the market.

my focus

I owned rules, brand voice, and the final inbox

While my teammates tackled onboarding and early inbox explorations, I focused on the features that would make or break trust: the automation rules system, brand voice configuration, and bringing the inbox to its final form. These were the highest-risk areas because they determined whether users would actually flip the switch on automation.

what I rejected

Early explorations that didn't work

Two directions I explored and abandoned based on user feedback.

Rules that felt like programming

My first automation designs looked like logic builders: if/then statements, nested conditions, technical syntax. Users froze. “Rules look a bit intimidating,” one participant told us. I was designing for flexibility when I should have been designing for confidence.

Brand voice: real-time testing split attention

I designed a split interface with voice guidelines on the left and live testing on the right. The hypothesis: real-time feedback builds confidence faster. Instead, users got stuck, constantly switching context between typing traits and checking output.

what shipped

Automation: plain language over technical logic

I borrowed from Apple Shortcuts: rules that read like sentences, not code. "When someone asks about shipping, reply with tracking info." A dedicated builder page replaced cramped modals. Mandatory sandbox testing before going live. Teams could experiment knowing they could always revert. The goal: make automation feel reversible, not permanent.
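To make the idea concrete, here is a minimal sketch of how a sentence-style rule with a sandbox gate and a revert path could be modeled. It is an illustration only, not Blueberry's implementation: the `Rule` class, the `RuleStatus` states, and every method name are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class RuleStatus(Enum):
    # Hypothetical lifecycle states, assumed for this sketch.
    DRAFT = "draft"          # created, not yet tested
    SANDBOXED = "sandboxed"  # has passed a sandbox run
    LIVE = "live"            # replying to real comments


@dataclass
class Rule:
    trigger: str  # e.g. "asks about shipping"
    action: str   # e.g. "reply with tracking info"
    status: RuleStatus = RuleStatus.DRAFT
    history: list = field(default_factory=list)

    def as_sentence(self) -> str:
        # The rule is shown to the operator as a sentence, not as logic.
        return f"When someone {self.trigger}, {self.action}."

    def _move_to(self, new_status: RuleStatus) -> None:
        # Remember the previous state so every change can be undone.
        self.history.append(self.status)
        self.status = new_status

    def pass_sandbox(self) -> None:
        self._move_to(RuleStatus.SANDBOXED)

    def go_live(self) -> None:
        # Sandbox testing is mandatory before a rule can go live.
        if self.status is not RuleStatus.SANDBOXED:
            raise ValueError("Run the rule in the sandbox before going live.")
        self._move_to(RuleStatus.LIVE)

    def revert(self) -> None:
        # Step back to the previous state, if there is one.
        if self.history:
            self.status = self.history.pop()


rule = Rule("asks about shipping", "reply with tracking info")
print(rule.as_sentence())
```

In this sketch, calling `go_live()` on a fresh rule raises an error, and `revert()` after going live returns the rule to its sandboxed state, which mirrors the "reversible, not permanent" goal.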

Brand voice: natural language over rigid controls

Users adjust how the AI responds through natural language prompts: describe the tone you want, then preview how responses might sound. No rigid sliders or dropdown menus. The preview lets users experiment before committing. Given more time, I would have added reasoning behind specific word choices to show users why the AI picked certain phrases. But even without that, the ability to preview and adjust built enough confidence that one user asked, "Can you save multiple brand voices?"

The final inbox: scan fast, reply with context

Rows stay collapsed for fast scanning. Click to expand: full comment thread, original post, and AI draft with reasoning, all in one view. No tab switching. No split screens. One participant rated it 6.5/7: "Easy to navigate. Each one has good buttons around each section."

impact

The numbers that matter

Over 10 weeks and 6 weekly testing rounds, the SUS score rose from 60 to 80. One participant summarized: "Super easy and it took exactly what you needed." By the end, users weren't just approving AI suggestions. They were asking how to scale them.

60 → 80 · SUS Score · 33% improvement

72.5 · Avg SUS · Above 68 benchmark

7/7 · Ease Rating · Final onboarding

6 · Test Rounds · Weekly iterations
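For readers who want to check the figures, the short sketch below reproduces the arithmetic behind them. It assumes the 33% is a relative change from the starting score and that the benchmark margin is a simple difference; the function and variable names are mine.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


# Figures taken from the stats above.
sus_start, sus_end = 60, 80
avg_sus, benchmark = 72.5, 68

improvement = percent_change(sus_start, sus_end)
margin = avg_sus - benchmark

print(f"SUS improvement: {improvement:.0f}%")
print(f"Average SUS is {margin:.1f} points above the benchmark")
```

Moving from 60 to 80 is a 20-point gain on a base of 60, which rounds to 33%, and the 72.5 average sits 4.5 points above the 68 benchmark.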

reflection

What this project taught me

Designing the rules and brand voice systems taught me that trust is earned in small moments, not big features. Every decision I made came back to one question: can the user see what's happening and undo it if needed? The best AI tools don't replace humans. They make human judgment faster and more confident. That principle will shape every AI product I work on.

what's next

Securing the position, then expanding

The MVP shipped. Now the product roadmap focuses on entrenching value before expanding scope. Each phase builds on the trust foundation we established.

Sequential rules system

Clearer automation setup for teams to define and understand their logic

DM functionality

Handle private conversations alongside public comments

Strategic dashboard

Sentiment trends and engagement analytics

let's talk

This case study is the highlight reel

The real story has more texture: failed prototypes, scope debates, the moment we almost shipped something that would have tanked trust. If you're building AI products and navigating similar trade-offs, I'd love to compare notes. If you're hiring and want to see how I think through ambiguous problems, let's talk.